For cache accesses, every main memory address can be viewed as three fields: a tag, a line (index) field, and a word offset within the block. The block address itself is divided into two parts, the index field and the tag field. The index selects a cache line, and the tag is stored in the cache alongside the block so that the cache can tell which main memory block currently occupies that line. If a line is already taken by a memory block when a new block needs to be loaded, the old block is discarded. The performance of direct mapping is directly proportional to the hit ratio.
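The field split described above can be sketched in a few lines. This is a minimal illustration, not a model of any particular processor: the function name `split_address` and the default sizes (16-byte blocks, 64 cache lines) are assumptions chosen only for the example.

```python
def split_address(address: int, block_size: int = 16, num_lines: int = 64):
    """Split a byte address into (tag, line, offset) for a direct-mapped cache.

    block_size and num_lines are hypothetical values picked for illustration.
    """
    offset = address % block_size          # position of the word inside its block
    block_number = address // block_size   # which main memory block the address is in
    line = block_number % num_lines        # the single cache line this block can use
    tag = block_number // num_lines        # distinguishes blocks that share that line
    return tag, line, offset


print(split_address(0x1234))  # (4, 35, 4)
```

With power-of-two sizes, the modulo and division operations correspond to simply slicing the address bits, which is why hardware can perform this split at no extra cost.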

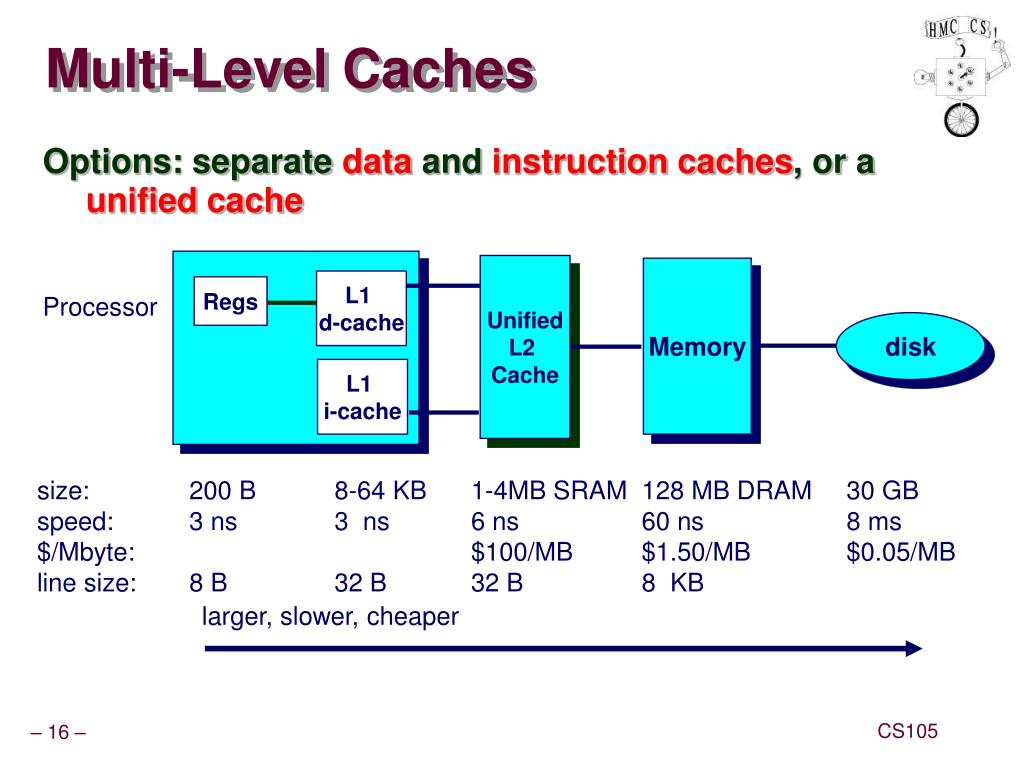

A primary cache is small, with an access time comparable to that of processor registers, and it is always placed on the processor chip. The secondary cache, referred to as the level 2 cache, is generally placed between the primary cache and the rest of the memory and is also placed on the processor chip.

Cache memory can hold only a limited number of blocks at any particular time, while main memory contains many more blocks. A mapping function is therefore used to map main memory blocks onto the blocks in cache memory. In direct mapping, each block of main memory is assigned to exactly one possible cache line.
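To make the direct-mapping rule concrete, here is a small simulation sketch. The class name `DirectMappedCache` and its tiny default sizes (4 lines of 4 bytes) are assumptions for illustration only. It tracks just the tag held by each line, which is enough to show hits, misses, and the replacement of an old block when two blocks compete for the same line.

```python
class DirectMappedCache:
    """Toy direct-mapped cache that records only tags, not data (illustrative)."""

    def __init__(self, num_lines: int = 4, block_size: int = 4):
        self.num_lines = num_lines
        self.block_size = block_size
        self.tags = [None] * num_lines  # tag currently held by each line
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a cache hit, False on a miss (loading the block)."""
        block = address // self.block_size
        line = block % self.num_lines    # the only line this block may occupy
        tag = block // self.num_lines
        if self.tags[line] == tag:
            self.hits += 1
            return True
        self.tags[line] = tag            # the old block in this line is replaced
        self.misses += 1
        return False

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = DirectMappedCache()
results = [cache.access(a) for a in (0, 1, 16, 0)]
print(results)            # [False, True, False, False]
print(cache.hit_ratio())  # 0.25
```

Addresses 0 and 16 fall in different blocks that map to the same line, so each access to one replaces the other. This is the conflict behaviour that makes the hit ratio so important for a direct-mapped design.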

Cache memory is faster than main memory for two major reasons. First, cache memory uses static random access memory (SRAM), whereas main memory uses dynamic random access memory (DRAM). Second, cache memory stores the instructions that the processor may require next, so they can be retrieved faster than instructions held in random access memory (RAM).

Using SRAM means that the access time is low, so data is retrieved from cache memory faster than from the computer's main RAM. SRAM does not need to be refreshed, as DRAM does, and the refresh process means that retrieving data from main memory takes longer.

Cache memory also copies some of the data held in RAM. To simplify the process, it works on the assumption that most programs store their data in sequential order. If the processor is processing data from locations 0-32, cache memory copies the contents of locations 33-64, expecting that these locations will be required next.

When the processor initiates a memory read, the first thing it does is check the cache memory. That check ends in either a cache hit or a cache miss. For example, if the processor needs the contents of RAM location 37, it first looks for them in cache memory; finding them there is called a cache hit.
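The benefit of copying nearby locations ahead of time can be estimated with a rough sketch. The function `sequential_hit_ratio` and its parameters are hypothetical, and the model is deliberately simplified: it assumes a strictly sequential read pattern and a cache large enough that nothing is ever evicted.

```python
def sequential_hit_ratio(num_accesses: int, block_size: int,
                         prefetch_next: bool = False) -> float:
    """Hit ratio for reading addresses 0..num_accesses-1 in order.

    Simplifying assumption: the cache never evicts anything.
    """
    cached_blocks = set()
    hits = 0
    for address in range(num_accesses):
        block = address // block_size
        if block in cached_blocks:
            hits += 1
        else:
            cached_blocks.add(block)      # miss: load the whole block
        if prefetch_next:
            cached_blocks.add(block + 1)  # also copy the following block
    return hits / num_accesses


print(sequential_hit_ratio(64, 8))                      # 0.875
print(sequential_hit_ratio(64, 8, prefetch_next=True))  # 0.984375
```

Without prefetching, only the first access to each block misses. With the next block copied in advance, only the very first access misses, which matches the expectation that sequential programs will need the following locations soon.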

Cache memory is a smaller, faster memory that stores copies of frequently used data from main memory locations. A central processing unit contains several independent caches for storing instructions and data.


Chrome implements caches at many levels of abstraction. At the core is the HTTP (browser) cache, a backend for the other caching mechanisms. Every request made over the network is proxied by the HTTP cache, which adheres to the relevant RFC. When a resource is requested for the first time, the cache is overwritten. To handle network connection failures gracefully, you could use Service Workers. The caches and cache storage would then be taken from disk again.

Blink uses the HTTP cache as a backend in two modes of creation: in memory and simple (filesystem). Which one is used depends on the global limit on how much memory the caches can take, and the current renderer's cache gets the largest share. Fonts, images and scripts are what is cached. If global memory usage reaches a specified threshold, the filesystem backend is used.

If you want your files to be served from memory, overriding the default mechanism, you can implement your own Service Worker. Using the File API, resources can be read and stored in an object in memory. Overriding the fetch event then replaces network and file reads with content served from this global object.
